Background Agents: AI for Work

Reflections from my recent fireside chat with the CEO of Wordware at GenAI week.

For decades, knowledge work has been single‑threaded: open an app, issue a command, wait. Background AI agents break that bottleneck by letting dozens of task‑specific models run in parallel, each quietly advancing your goals while you sip coffee.

We see the prototype of this future in software engineering today. Tools like Devin spin up isolated sandboxes, write code, and open pull requests overnight. Founders I back now spend more time curating task lists and reviewing diffs than writing functions. They have effectively graduated from developer to project manager of 30 ever‑improving interns.

Expect those practices to permeate every corner of knowledge work. Sales teams will launch lead‑qualification agents after hours; lawyers will wake up to red‑lined leases; recruiters will arrive to pre‑screened candidate pools, each with a dashboard for quick approve / reject / tweak loops.

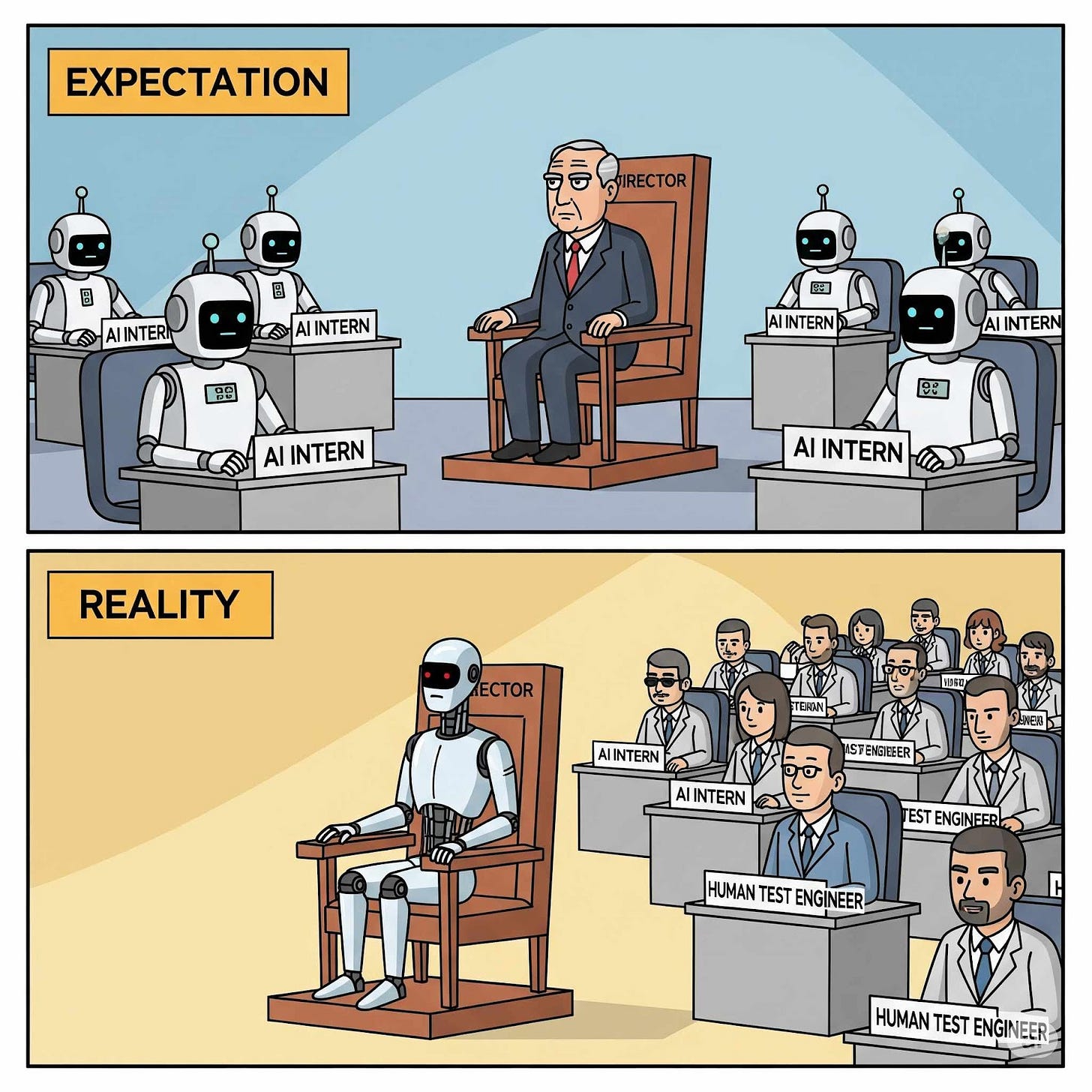

Credit to Yaroslav Bulatov for the image generation. As we use background agents, the AI gets better from the work we do: human feedback is key to AI improvement.

The Human Loop: Approval, Taste & Trust

Full autonomy is sexy on stage, but partial automation wins in production. Too many people fixate on demos of fully automated AI and miss the real opportunities in partial automation. By design, high‑impact agents:

Ask for permission where confidence is low.

Learn your taste from each correction.

Surface rationale so you’re never flying blind.

That feedback cycle (AI drafts, human edits, AI retrains) shrinks turnaround time without surrendering control. The result feels less like delegating to a black box and more like wielding a superpowered co‑pilot.
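To make the approve/correct loop concrete, here is a minimal sketch. It assumes each agent draft carries a self-reported confidence score and a short rationale. `Draft`, `ApprovalGate`, and the 0.8 threshold are hypothetical names and values for illustration, not any product's API. Confident drafts ship automatically. Low-confidence drafts go to a human, and every human edit is logged as a preference example that a later retraining or prompt-tuning step could consume.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Draft:
    task: str
    text: str
    confidence: float  # hypothetical self-reported score in [0, 1]
    rationale: str     # shown to the reviewer so they are never flying blind


@dataclass
class ApprovalGate:
    threshold: float = 0.8  # assumed cutoff; would be tuned per domain
    # (task, agent draft, human correction) triples: the "learn your taste" signal
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def process(self, draft: Draft, ask_human: Callable[[Draft], str]) -> str:
        """Auto-ship confident drafts; ask permission when confidence is low."""
        if draft.confidence >= self.threshold:
            return draft.text
        final = ask_human(draft)  # human approves (returns text unchanged) or edits it
        if final != draft.text:
            # Store the correction so a later training step can learn from it.
            self.feedback.append((draft.task, draft.text, final))
        return final


# Usage with stand-in reviewers (lambdas instead of a real approval UI).
gate = ApprovalGate()
routine = Draft("reply", "Thanks, see you Tuesday.", 0.95, "routine scheduling")
risky = Draft("reply", "We can cut the price by 40%.", 0.4, "pricing outside usual range")
print(gate.process(routine, ask_human=lambda d: d.text))
print(gate.process(risky, ask_human=lambda d: "Let's discuss pricing on a call."))
print(len(gate.feedback))  # 1: only the edited draft produced a training example
```

The design choice worth noting is that approvals without edits are not logged as corrections. Only disagreements become training signal, which keeps the feedback set small and focused on taste.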

Injecting Intelligence Without Driving Users Crazy

Picture any long‑form text that’s been blasted through a generic machine‑translation engine: the gist lands, but idioms fall flat and the authorial voice gets sanded off. A smarter agent could draft 90% of the work, then let a bilingual editor define phrase‑class rules ("use translation A for this idiom, translation B for that one"). Those preferences ripple across the entire book, audiobook script, subtitle file, or support knowledge base: AI does the heavy lifting while the human applies nuance. Getting the interaction model between user and AI right will be subtle; software should put the power in the right places, with the right controls.
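Here is one way an editor's phrase-class rules could propagate across every output. This is a sketch under loudly stated assumptions. Rules are stored as simple pairs that map a literal machine rendering to the editor's preferred phrase, and they are applied as a post-editing pass. The rule format and the `apply_rules` helper are hypothetical. A production system would more likely feed these preferences back into the model's prompt or fine-tuning data than rely on string replacement.

```python
import re
from typing import Dict


def apply_rules(text: str, rules: Dict[str, str]) -> str:
    """Replace each literal machine rendering with the editor's preferred phrase."""
    # Process longer phrases first so a long idiom is rewritten before
    # any shorter rule that happens to overlap with it.
    for literal in sorted(rules, key=len, reverse=True):
        pattern = re.compile(r"\b" + re.escape(literal) + r"\b", re.IGNORECASE)
        replacement = rules[literal]
        # A function replacement avoids re.sub interpreting backslashes in the rule.
        text = pattern.sub(lambda _m: replacement, text)
    return text


# Hypothetical rules an editor might write for literal French-to-English idioms.
rules = {
    "it is raining ropes": "it's pouring",  # il pleut des cordes
    "good courage": "good luck",            # bon courage
}

# The same rules apply uniformly to every chapter, subtitle, or help article.
chapters = ["He said it is raining ropes.", "I wish you good courage."]
for chapter in chapters:
    print(apply_rules(chapter, rules))
```

Because the editor's decisions live in one rule table instead of in scattered manual fixes, a single correction updates every downstream artifact that reuses the text.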

For high‑stakes domains, pair your doing‑agent with a reviewing‑agent, an LLM trained on policy, tone, or legal constraints, to catch errors before a human sees them. “Delegate but verify” scales trust. Design becomes a dialogue: the system proposes, the human disposes, the model improves, and annoyance turns into delight.
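Below is a minimal sketch of "delegate but verify." It assumes the doing-agent and reviewing-agent are plain callables standing in for LLM calls, and that the reviewer returns a list of policy or tone violations. The names `draft_fn`, `review_fn`, `max_rounds`, and the toy agents are hypothetical. A draft reaches the human as clean only after the reviewer raises no objections. If the objections persist after a bounded number of revision rounds, the draft is escalated with the outstanding issues attached.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Result:
    text: str
    issues: List[str]
    escalate: bool  # True when a human must resolve issues the agents could not


def delegate_but_verify(
    task: str,
    draft_fn: Callable[[str, List[str]], str],  # doing-agent: (task, prior issues) -> draft
    review_fn: Callable[[str], List[str]],      # reviewing-agent: draft -> violations
    max_rounds: int = 3,
) -> Result:
    """Doing-agent drafts, reviewing-agent critiques; loop until clean or out of rounds."""
    issues: List[str] = []
    text = ""
    for _ in range(max_rounds):
        text = draft_fn(task, issues)  # revise in light of the last objections
        issues = review_fn(text)
        if not issues:
            return Result(text, [], escalate=False)
    return Result(text, issues, escalate=True)


# Toy stand-ins: a reviewer enforcing a banned-word policy, and a drafter that
# rewrites its copy once it receives objections.
BANNED = ["guarantee"]


def toy_drafter(task: str, issues: List[str]) -> str:
    return "We guarantee results." if not issues else "We expect strong results."


def toy_reviewer(text: str) -> List[str]:
    return [f"banned word: {w}" for w in BANNED if w in text.lower()]


print(delegate_but_verify("marketing blurb", toy_drafter, toy_reviewer))
```

Capping the rounds matters. Without a cap, two agents that disagree could loop forever, while the human would still never see the unresolved conflict.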

What Great Founders Must Still Do

Yes, everyone now has access to the same foundation models. Differentiation shifts to:

Novel UX: delighting users with invisible AI until the moment it matters.

Data capture & context: owning the ingestion layer (email, Slack, calls) and the actions on top of it, graduating systems of record into systems of action.

Audacious vision: being just delusional enough to ship a demo that feels like science fiction and then scaling it.

Case‑Studies in the Wild

Below are five snapshots that surfaced during the panel, each illustrating how delusional audacity plus pragmatic agent design can bend reality:

1. Filip & Wordware

Wordware’s team deliberately chose a slightly “sub‑optimal but AI‑legible” stack so agents can maintain code faster than humans ever could. Filip now manages 30 parallel agents like interns: they write PRs overnight, he reviews a dashboard at breakfast, and an agent‑tester flags regressions before code merges. The result is speed that feels unfair.

2. Background Agents for Therapy & Culture

Filip records every therapy session and dumps the transcripts into a private context window. A custom agent surfaces recurring themes and coaching prompts, giving him what he calls “billionaire‑level mental health support” on demand. Inside his startup, he runs a Slack‑scraper agent that compares messages and Linear tickets against quarterly goals, then pings him about alignment gaps or even brewing interpersonal conflicts.

3. Lease‑Lawyer Agents: Flat‑Fee Review at Machine Speed

A boutique real‑estate attorney exported years of redlines into a structured Google Sheet, then wired an agent to apply those rules to fresh documents. Now the AI reviews massive commercial leases overnight; the lawyer spends 30 minutes spot‑checking and emails the client a polished memo. Hourly billing felt dishonest, so she flipped to a per‑lease flat fee, pocketing higher margins while clients get next‑day turnaround. A textbook example of agents compressing intent and widening access to expert services.

4. Lovable & ElevenLabs: Winning with Audacity

Lovable should have been swallowed by Wix, and ElevenLabs by the big speech labs. Both survived, and are now thriving, because their founders shipped jaw‑dropping demos long before the incumbents moved. Their edge isn’t raw model power; it’s risky UX choices that users fell in love with.

5. Granola.ai: Data, Context, and the Long Game

Granola gives meeting transcription away at near‑zero margin, quietly building the richest corpus of conversational work data. The obvious play is an agent layer on top, turning those transcripts into automatic summaries, task extraction, and even follow‑up emails. Owning the ingestion layer first positions them to evolve from “system of record” to “system of action.”

A Day in 2028

Picture a typical San Francisco operator:

Morning walk: earbuds in, personal agent summarizes yesterday, proposes priorities, books a follow‑up brunch.

Deep‑work block: collaborates with a large model like Claude to develop ideas, then approves agent‑written memos for distribution.

Reactive hour: from 5 to 6 p.m., triage only the escalated items; everything else is already handled or scheduled.

For remote workers the cadence flexes further: a few bursts of approvals might suffice to run an entire side business from Bali.

Job Security, Skills & the Email-Every-Six-Minutes Problem

Jobs don’t vanish wholesale; tasks do. Data entry, rote search, and endless inbox grazing (the average knowledge worker checks email every six minutes) will look like manual farming to our kids. Skills that rise in value:

Agent orchestration and prompt design

Domain taste & judgment

Emotional intelligence and conflict mediation, which now matter more than spreadsheet wrangling

Closing Thought

Background agents aren’t merely a productivity hack; they’re a new canvas for human creativity. The sooner we craft them to respect our attention, learn our preferences, and surface only the truly important, the sooner work transforms from reactive slog to intentional craft.

Let’s build that future, and invest in the founders bold enough to paint it.

If you are a founder thinking about AI for Work, please reach out; I’d love to meet you, even at the chain-of-thought phase. Consider it an invitation to a conversation, not a pitch.

If you like this essay, consider sharing with a friend or community that may enjoy it too.

More essays: Founder Mental Software, The Unfiltered Truth About Early-Stage M&A, Agentic systems: panning for gold, AI Engineer World’s Fair 2025 - Field Notes
