AI Engineer World’s Fair 2025 - Field Notes

(San Francisco • 3-5 June 2025 • ≈3 000 attendees • 18 parallel tracks)

This week’s AI Engineer World’s Fair filled two floors of the SF Marriott Marquis with ~3 000 builders, Fortune-500 CTOs and the entire “LLM-OS” supply chain — from the biggest AI Labs to the tiniest plug-in author. I volunteered, scanned 318 badges, and got to meet the most interesting founders. With an eye on the scanner and a ear at the conference, here are my 10 takeaways from manning the keynote door.

1. Engineering Process Is the New Product Moat

Template everything. PRDs, design docs (ADRs), RCAs, quarterly roadmaps, and recurring meeting agendas should live in shared templates with automatic transcription. Standardisation keeps engineering and product aligned, accelerates onboarding, and surfaces best-practice gaps early.

Plan with AI, not by AI. Use models to mine context, surface constraints, and draft alternatives—then humans decide. Three-phase loop: Gather context → Collaborative discovery → Polish & visualise. AI cuts overhead but strategic intent still requires human judgment.

Documentation as context for agents. Up-to-date specs unlock agentic coding and automated doc-linting (detect out-of-sync docs, missing requirements, inconsistency). Coding agents perform measurably better when fed live system context.

2. Quality Economics Haven’t Changed—Only the Tooling

“Higher quality = fewer defects.” The classic cost-of-defect curve still rules: a bug fixed in prod costs 30-100× the same bug caught at the requirements stage.

Prevention stack for AI-generated code:

Learn existing solutions & patterns

Plan before you prompt

Write specs & strict style guides

Detect: static linters → unit/integration tests → LLM-based code review

Testing guidance: write happy + unhappy paths, emphasise integration tests, run in sandboxes, measure coverage.

Bottom line: Rapid LLM productivity must be paired with classic software craftsmanship or you simply ship defects faster.

3. Four Moving Frontiers in the LLM Stack

Speakers framed every model decision as a live trade-off across four axes:

Reasoning vs Non-reasoning – deeper thinking adds ~9× latency.

Open weights vs Proprietary – open models have closed most of the IQ gap since late 2024; Chinese labs (DeepSeek, Alibaba) currently lead the open frontier.

Cost – >500× spread to run the same benchmark today.

Speed – output tokens per second keep climbing via sparsity, smaller models, and inference-optimised software.

Takeaway: Winning platforms will treat model choice as continuous supply-chain optimisation, not a one-off vendor bet.

4. Efficiency Gains vs Run-Time Demand

GPT-4-level intelligence is now ~100× cheaper than its 2023 debut (smaller models ✕ FlashAttention ✕ new GPUs).

Yet typical compute per user request is up 20× because: larger context windows, reasoning models emit ~10× more tokens, and agentic workflows chain multiple calls.

The infra opportunity: products that arbitrate price/perf trade-offs in real time and hide complexity from app teams.

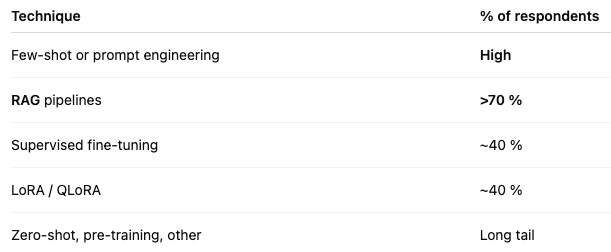

5. How Builders Are Customising Models (Survey Data)

It was shocking to me, the amount of fine tuning that people do. If you want a recap of all the different methods, please see my writeup - Fine Tuning LLMs - learnings from the DeepLearning SF Meetup.

Audio models show the highest intent-to-adopt of any modality—pointing to an emerging wave of speech and voice products.

6. Autonomy ≠ Replacement — Lessons From Claude-at-Work

One-third first-try success. Fully autonomous PRs land cleanly ≈ 33 % of the time; the rest need human nudges.

Guard-rails, not hand-offs. Every repo ships a

Claude.md(APIs + domain vocab); tests are scaffolded before generation; commits are tiny, checkpoint-heavy bursts for instant rollback.Practical takeaway: treat the model as a junior pair-programmer—speed comes from codified context plus fast undo, not from chasing 100 % autonomy.

Bottom line: Autonomy is a power-up, not a drop-in replacement; invest in context and recovery paths.

7. Jevons Paradox Hits AI Compute

Unit cost plunges… GPT-4-class reasoning is ~100 × cheaper than in 2023 (smaller models ✕ FlashAttention ✕ new silicon).

…but aggregate demand soars. Agents chain calls, context windows balloon, token outputs jump 10 ×, driving total compute per workflow up ~20 ×.

Strategic implication: durable moats sit in semantic layers—ontologies & knowledge graphs—that turn cheap generic tokens into domain-specific value.

Bottom line: Falling token prices won’t lower bills; whoever owns the semantic context captures the surplus.

8. Evals Are the New CI/CD — and Feel Wrong at First

Why it’s counter-intuitive. Seasoned engineers learn to avoid heavy eval suites for deterministic APIs—manual laptop tests catch almost everything, and “test-in-prod” is usually cheaper. That habit breeds an instinctive aversion to evals.

Why LLM systems flip the logic. Generative pipelines are stochastic and high-dimensional; manual poking can’t approximate real-world variability. Evals for LLMs play the same role manual smoke-testing once did for REST endpoints: they’re the only sane gate before users see nonsense.

Step-wise evaluators. Borrow Hex’s pattern: attach a pass/fail check to every hop—retrieval, planning, tool call, final answer—so errors localise instead of compounding (30 % slip per step becomes 90 % at the tail).

Execution rule: if you wouldn’t merge without unit tests, don’t deploy an LLM chain without per-step evals and a drift dashboard.

Bottom line: Evals feel like overhead if you came up on deterministic software, but for generative systems they’re the unit tests of cognition—skip them and your agent is a lottery ticket.

9. Semantic Layers — Context Is the True Compute

Why they matter. As I argued in “Are Semantic Layers the treasure map for LLMs?”, a well-curated ontology (tables, APIs, domain vocab) is the difference between token spit-out and task completion. Once open-weights IQ converges, the layer that maps model output to business truth becomes the moat.

Signals from the floor.

“Template everything” &

Claude.mdfiles are mini semantic layers—they encode the functions, objects and style rules Claude must respect.DeepSeek & Alibaba close the model-quality gap, proving that context beats proprietary weights.

Hex’s per-step evaluators work because each node in the DAG has a typed contract; without that semantic scaffold, evals would grade noise.

Real-world stakes. In healthcare we ground prompts in RxNorm so an agent knows all statins, not just “Lipitor.” Autonomous driving teams fuse multiple low-probability detections (child, bike, box) into a single avoid class—another ontology in action.

Investment lens. Semantic-layer tooling (version-controlled vocab, vector-friendly joins, RAG-ready graphs) and vertical apps that own proprietary context will capture more value than yet-another generic agent.

Bottom line: Cheap tokens are plentiful; structured meaning is scarce. Own the semantic layer and you set the terms for every model, eval and agent that sits above it.

10. Strategic Implications for Investors, LPs & Founders

Process maturity around docs, RCAs, and planning is becoming a due-diligence item for enterprise buyers.

Cost-engineering (latency, $$, carbon) is an investable layer: expect “FinOps for LLMs” tools to proliferate.

Data + workflow integration, not model IP, will anchor defensibility as open-weights intelligence converges on proprietary leaders.

Parameter-efficient customisation (RAG, LoRA) wins practical adoption today; bets on full-stack pre-training should factor longer payback.

Modal expansion (audio, video) is the next demand curve—founders building infra or primitives here look early but timely.

Building in that direction? Let’s chat. Also let me know which topic would you like to see a deep dive on. Leave in comments!

If you like this essay, consider sharing with a friend or community that may enjoy it too.

Spot on re RAG and workflow > model IP. We're applying it in HVAC field —where techs still fly blind on faults and fixes. AI to help them skip the ‘what’s broken’ phase and get straight to the fix. Less guesswork, fewer truck rolls, faster uptime.

thanks for sharing this!!! Point 8 and 9 spot on